
fix: Remove fallback thoughtSignature for Gemini 3 models #10331


base: main


Review complete. No issues found. The latest commit correctly restores the

This matches the Gemini API docs FAQ which recommends using the fallback "in cases where it can't be avoided, e.g. providing information to the model on function calls and responses that were executed deterministically by the client, or transferring a trace from a different model."


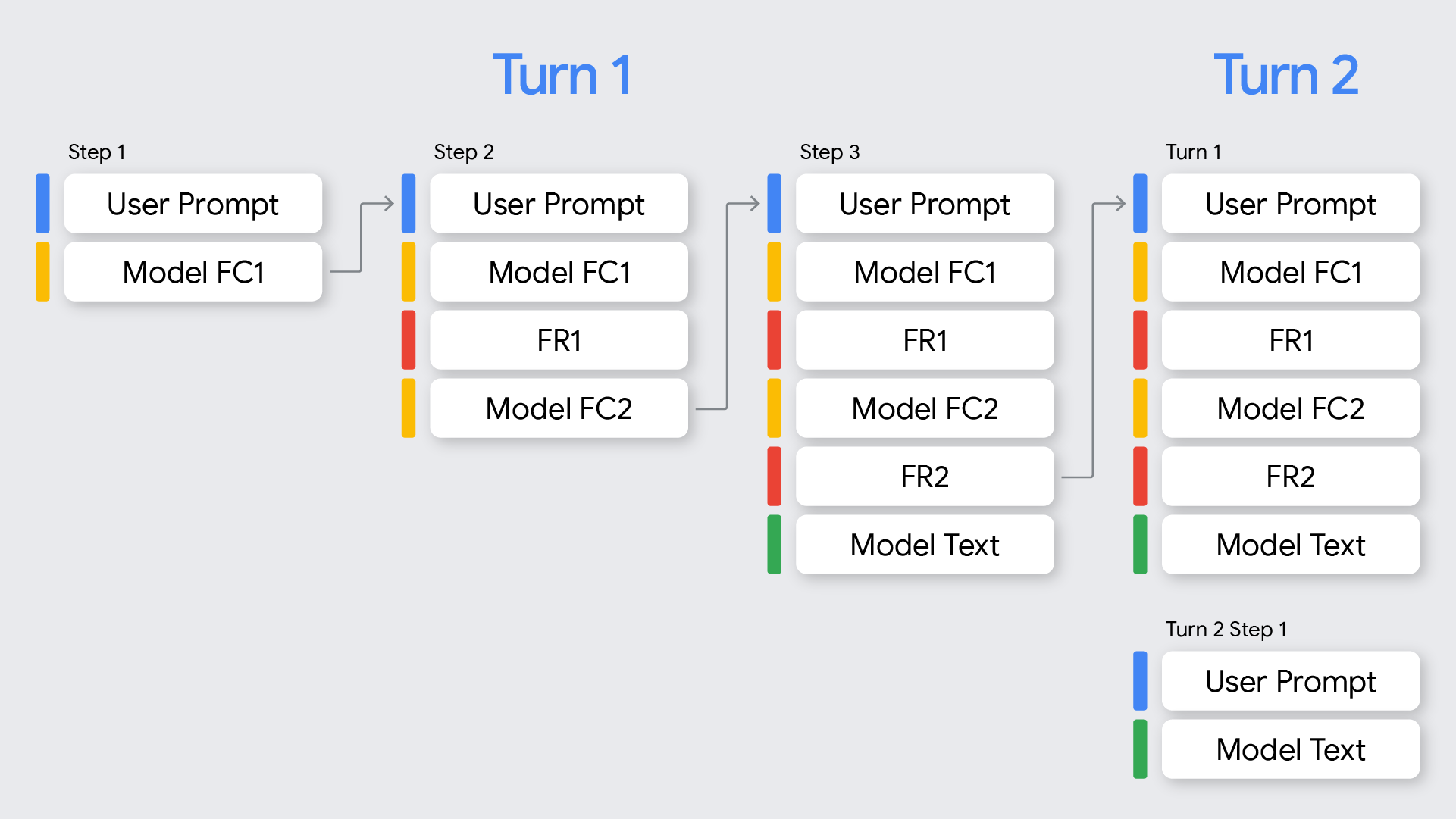

@roomote if I recall correctly, the thought signature fallback was used when switching from models that do not produce thought signatures to models that do, such as Gemini 3. Does the change you applied still maintain that? That fallback was there for a reason. Here are some docs for context:

Thought signatures are encrypted representations of the model's internal thought process and are used to preserve reasoning context across multi-step interactions. When using thinking models (such as the Gemini 3 and 2.5 series), the API may return a

As a general rule, if you receive a thought signature in a model response, you should pass it back exactly as received when sending the conversation history in the next turn.

When using Gemini 3 models, you must pass back thought signatures during function calling, otherwise you will get a validation error (4xx status code). This includes when using the

How it works

The graphic below visualizes the meaning of "turn" and "step" as they pertain to function calling in the Gemini API. A "turn" is a single, complete exchange in a conversation between a user and a model. A "step" is a finer-grained action or operation performed by the model, often as part of a larger process to complete a turn.

This document focuses on handling function calling for Gemini 3 models. Refer to the model behavior section for discrepancies with 2.5.

Gemini 3 returns thought signatures for all model responses (responses from the API) with a function call. Thought signatures show up in the following cases:

The following table provides a visualization for multi-step function calls, combining the definitions of turns and steps with the concept of signatures introduced above:

Signatures in function calling parts

When Gemini generates a

Sequential function calling example

This section shows an example of multiple function calls where the user asks a complex question requiring multiple tasks. Let's walk through a multiple-turn function calling example where the user asks a complex question requiring multiple tasks:

The following code illustrates the sequence in the above table.

Turn 1, Step 1 (User request)

Turn 1, Step 1 (Model response)

Turn 1, Step 2 (User response - Sending tool outputs)

Since this user turn only contains a

**Turn 1, Step 2 (Model)**

The model now decides to book a taxi based on the previous tool output.

Turn 1, Step 3 (User - Sending tool output)

To send the taxi booking confirmation, we must include signatures for ALL function calls in this loop (

Parallel function calling example

Let's walk through a parallel function calling example where the user asks

The following code illustrates the sequence in the above table.

Turn 1, Step 1 (User request)

Turn 1, Step 1 (Model response)

Turn 1, Step 2 (User response - Sending tool outputs)

We must preserve

Signatures in non

Fixed the cross-model switching concern. The fallback

…rios

- Only use fallback when `tool_use` blocks exist without `thoughtSignature`
- Fallback is needed when switching from non-thinking models to Gemini 3
- Added tests for both cross-model (fallback) and non-thinking (no signature) scenarios
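The rule in this commit message can be sketched roughly as follows. This is an illustration only, not the code in `gemini-format.ts`: the block types, the way a stored signature is represented, and the `resolveThoughtSignature` helper are all hypothetical. Only the `tool_use` and `thoughtSignature` names and the fallback string come from this PR.

```typescript
// Hypothetical, simplified content-block shapes (not the project's real types).
type TextBlock = { type: "text"; text: string };
type ToolUseBlock = { type: "tool_use"; id: string; name: string; input: unknown };
type ThoughtSignatureBlock = { type: "thoughtSignature"; thoughtSignature: string };
type Block = TextBlock | ToolUseBlock | ThoughtSignatureBlock;

// Fallback value quoted in this PR's description.
const FALLBACK_SIGNATURE = "skip_thought_signature_validator";

// Hypothetical helper: pick the signature (if any) to attach to the
// function calls derived from one assistant message.
function resolveThoughtSignature(blocks: Block[]): string | undefined {
  // 1. A real signature from a previous response always wins.
  const real = blocks.find(
    (b): b is ThoughtSignatureBlock =>
      b.type === "thoughtSignature" && b.thoughtSignature.length > 0,
  );
  if (real) return real.thoughtSignature;

  // 2. Tool calls without a signature (e.g. history produced by another
  //    model): use the fallback.
  const hasToolUse = blocks.some((b) => b.type === "tool_use");
  if (hasToolUse) return FALLBACK_SIGNATURE;

  // 3. No tool calls and no signature: send nothing.
  return undefined;
}
```

Under these assumptions, a message with a stored signature returns that signature, a message with only `tool_use` blocks returns the fallback, and a text-only message returns `undefined`.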

Fixes the 400 "Request contains an invalid argument" error when using Gemini 3 Pro and Flash models.

Problem

Users were experiencing 400 errors with Gemini 3 Pro and Flash models (effort-based reasoning models), but not with Gemini 2.5 models (budget-based reasoning models). The error message indicated: "Request contains an invalid argument" with status "INVALID_ARGUMENT".

Root Cause

The code was using a fallback value `"skip_thought_signature_validator"` for the `thoughtSignature` field when no actual signature was present in the conversation history. This fallback was being sent to Gemini 3 models, which rejected it as invalid.

Solution

Modified the logic in `gemini-format.ts` to only include the `thoughtSignature` field when a real signature exists from a previous response. The fallback is removed entirely, allowing the first request in a conversation (or after model switches) to work correctly.

Changes

- `gemini-format.ts`: Removed the fallback to `"skip_thought_signature_validator"` and only set `functionCallSignature` when `activeThoughtSignature` is truthy
- `gemini-format.spec.ts`: Updated test to reflect the new behavior where tool calls without an existing signature do not include the `thoughtSignature` field

Testing
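The behavior listed under Changes can be exercised with a small sketch like the one below. It is not the project's implementation: the `FunctionCallPart` shape and the `buildFunctionCallPart` helper are hypothetical, and only the `thoughtSignature` and `activeThoughtSignature` names are taken from this description.

```typescript
// Hypothetical, simplified shape of a function-call part sent to Gemini.
interface FunctionCallPart {
  functionCall: { name: string; args: Record<string, unknown> };
  thoughtSignature?: string;
}

// Hypothetical helper mirroring the described change: the field is set only
// when a real signature from a previous response is available.
function buildFunctionCallPart(
  name: string,
  args: Record<string, unknown>,
  activeThoughtSignature?: string,
): FunctionCallPart {
  const part: FunctionCallPart = { functionCall: { name, args } };
  // Omit the field entirely when the signature is missing or empty.
  if (activeThoughtSignature) {
    part.thoughtSignature = activeThoughtSignature;
  }
  return part;
}

const withoutSignature = buildFunctionCallPart("read_file", { path: "a.txt" });
const withSignature = buildFunctionCallPart(
  "read_file",
  { path: "a.txt" },
  "sig-from-previous-response",
);

console.log("thoughtSignature" in withoutSignature); // false
console.log(withSignature.thoughtSignature); // "sig-from-previous-response"
```

Omitting the key, rather than setting it to `undefined` or a placeholder, keeps the serialized request free of any `thoughtSignature` field when no signature exists.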
